Have you ever watched a video where a face swap was involved? On YouTube, you can find plenty of satirical videos featuring celebrity swaps like those of Jimmy Fallon and Paul Rudd, or Bill Hader and Tom Cruise. One moment you think you’re looking at someone, only to see their face change mid-video. It’s called a “deepfake.”

What Is a Deepfake?

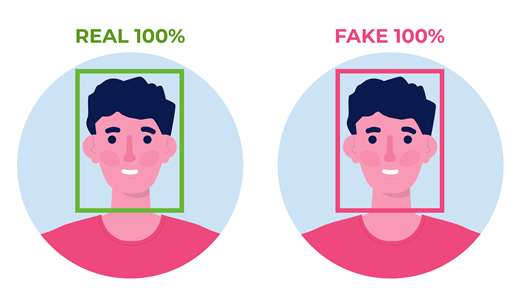

The word deepfake combines two terms. The deep part comes from “deep learning,” a subset of artificial intelligence dealing with algorithms that can learn from data and make decisions on their own. The fake part is fairly self-explanatory. In other words, a deepfake takes an image or video of one person and replaces that person’s likeness with someone else’s. It may look and sound like the real thing, but it’s not. And although the technology can be used in seemingly harmless ways, it can also be dangerous.

The Danger of Deepfakes

Snapchat and its users have been using face-swapping technology for years just for fun. The harm comes when someone uses this technology on another person’s likeness without consent. Often, that means pornography.

Having their faces swapped into pornographic material used to be a problem only for big names like Emma Watson, Natalie Portman and Wonder Woman’s Gal Gadot. But now anyone who has an image posted online is in danger of becoming a victim.

Take law graduate and activist Noelle Martin. One day, Martin received a link from an anonymous emailer. When she opened the link, she was stunned to see herself in various pornographic videos. Only it wasn’t her—just her face attached to someone else’s body. However, the videos looked entirely real.

Martin tried to contact authorities, and she even attempted to find the culprit, but she soon realized that there weren’t many laws to protect the victims of deepfakes. So, she got to work in an attempt to create some change.

Now What?

Today, some states and countries have laws against pornographic deepfakes. But the internet is a deep, dark hole, and when companies like Reddit and apps like Zao normalize face swapping, there are bound to be continual issues.

For parents, it’s just one more reason to teach children to pay attention to where they are posting their pictures and videos and to carefully read privacy policies. Yet, in the end, the most helpful tool will be laws that work to protect the innocent.
